Carving out a module from the monolith

This is not going to be your typical abstract microservices article quoting some Martin Fowler. Rather, I'll try to walk you through some practical solutions for extracting one capability of "the Monolith" into a microservices-based component.

These days, Engineering at Flexera is busy growing the next-gen architecture, code-named One Flexera, which leverages Event Streaming and Microservices patterns in a brand new Go codebase. Our existing capabilities, currently spread across independent products, many of them monolithic applications, will become capabilities of the new architecture, taking advantage of a set of shared components that can scale horizontally.

While One Flexera is a long-term endeavor, stakeholders are eager to see some early value out of this modernization effort. We identified features of existing products that are good candidates for being rebuilt as components using the new tech stack. Each new component is designed to be compatible with both the legacy product and the new One Flexera architecture. To plug it into the monolith, you often need to create a data path loop, out of the monolith and back in, through your redesigned component. This back-porting needs to deal with a tricky impedance mismatch: making an Event Streaming component work with a SQL-based application.

CDC

Here comes Change Data Capture to the rescue. Most mature relational databases offer some variant of CDC. It watches for changes in designated tables and creates a stream of events out of these changes. This is done efficiently, for example by reading the database's transaction log (the write-ahead log, or WAL).

For the component developed by our team, Allium, we investigated a few options including Debezium/Kafka. For reasons like performance and operational cost, we eventually decided to build our own adapter. We're building it on top of the Change Tracking (CT) feature of SQL Server and the Redis in-memory database. We basically query the CT tables and store events in Redis. The reason we can get away with a volatile database is that we're just building an adapter here; the reference persistence is already in SQL. A cold restart for our specific data volume is quick enough. To understand this better, let's dive into the details a bit.
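
To make the "query the CT tables and store events" loop concrete, here is a minimal sketch of a single polling step. It is an illustration, not our actual adapter code: `ChangeSource`, `EventSink`, `PollOnce` and the in-memory fakes are hypothetical names. In a real adapter, `ChangesSince` would presumably wrap a SQL Server `CHANGETABLE(CHANGES <table>, @last_sync_version)` query, and `Append` would write to Redis.

```go
package main

import (
	"fmt"
	"sort"
)

// Change is one row-level change, loosely modeled on what SQL Server
// Change Tracking reports: a key, an operation and a version number.
type Change struct {
	Key     string
	Op      string // "I" insert, "U" update, "D" delete
	Version int64
}

// ChangeSource is a hypothetical abstraction over the CT query.
type ChangeSource interface {
	ChangesSince(version int64) ([]Change, error)
}

// EventSink is a hypothetical stand-in for the Redis writes.
type EventSink interface {
	Append(c Change) error
}

// PollOnce reads every change newer than lastVersion, forwards the changes
// to the sink in version order, and returns the version of the last change
// that was stored successfully.
func PollOnce(src ChangeSource, sink EventSink, lastVersion int64) (int64, error) {
	changes, err := src.ChangesSince(lastVersion)
	if err != nil {
		return lastVersion, err
	}
	sort.Slice(changes, func(i, j int) bool { return changes[i].Version < changes[j].Version })
	for _, c := range changes {
		if err := sink.Append(c); err != nil {
			return lastVersion, err
		}
		lastVersion = c.Version
	}
	return lastVersion, nil
}

// memSource and memSink are in-memory fakes so the sketch runs on its own.
type memSource []Change

func (m memSource) ChangesSince(version int64) ([]Change, error) {
	var out []Change
	for _, c := range m {
		if c.Version > version {
			out = append(out, c)
		}
	}
	return out, nil
}

type memSink struct{ events []Change }

func (s *memSink) Append(c Change) error {
	s.events = append(s.events, c)
	return nil
}

func main() {
	src := memSource{{"user:1", "I", 1}, {"user:2", "U", 2}, {"user:3", "D", 3}}
	sink := &memSink{}
	version, err := PollOnce(src, sink, 0)
	fmt.Println(version, len(sink.events), err)
}
```

The returned version is the only state the poller needs to carry between iterations, which is part of why a cold restart is cheap: start again from version 0 and rebuild.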

At this point I assume you are familiar with Kappa vs Lambda architecture (if not, you can start here). While not guaranteed to fit everywhere, a Kappa architecture is the desired one for reasons of simplicity. Here are some cases where Kappa might fit:

- where the computation only consumes latest diffs (events representing changes in the dataset)

- where it works with a time window of events, say, the last 30 minutes

- where rebuilding the full state by replaying all meaningful historical events can be done efficiently

Log Compaction

For the last category, which is where our component falls, the keyword is "meaningful". For example, consider a case where you need to compute an aggregate on top of a users table. You will have a succession of events like "user 1 created", "user 1 updated", "user 1 disabled". And later, maybe even "user 1 deleted". You can see why, at least for some computations, when you replay the history you only need one event per user record: the one describing its final state. You could replace all historical events for that record with just that one. This concept is called log compaction. The size of the compacted log that you need to persist is capped at about the size of your full state, that is, the size of your old relational database.
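
The idea can be sketched in a few lines of Go. This is an offline, batch version under simplifying assumptions: the `Event` type and `Compact` function are hypothetical, and a key whose last event is a deletion is dropped immediately (a real platform may keep such tombstones around for a while).

```go
package main

import "fmt"

// Event is a hypothetical change event keyed by record identifier.
type Event struct {
	Key     string
	Payload string
	Deleted bool
}

// Compact keeps only the last event for each key, preserving the relative
// order of the surviving events. Keys whose last event is a deletion are
// dropped entirely.
func Compact(log []Event) []Event {
	// First pass: remember the position of the last event for every key.
	last := make(map[string]int, len(log))
	for i, e := range log {
		last[e.Key] = i
	}
	// Second pass: keep an event only if it is the last one for its key.
	out := make([]Event, 0, len(last))
	for i, e := range log {
		if last[e.Key] == i && !e.Deleted {
			out = append(out, e)
		}
	}
	return out
}

func main() {
	log := []Event{
		{Key: "user:1", Payload: "created"},
		{Key: "user:2", Payload: "created"},
		{Key: "user:1", Payload: "updated"},
		{Key: "user:1", Payload: "disabled"},
		{Key: "user:2", Deleted: true},
	}
	for _, e := range Compact(log) {
		fmt.Println(e.Key, e.Payload)
	}
}
```

Replaying the compacted log yields the same final state as replaying the full history, which is why its size is bounded by the size of the state rather than by the age of the system.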

Real-time Log Compaction

Some streaming platforms, such as Kafka, have a log compaction feature: background threads continuously work on cleaning the tail of the log.

Another choice, and this is what we chose to do in our adapter, is to keep only the last state of each record as deltas come in. In parallel, we also keep a short log: a recent history of events. The state section serves cold starts. A fresh subscriber will not provide an offset to start from. Therefore it will receive the full state as events, followed by the events that entered the log while the state was being streamed, followed by any future events. This startup process needs to be well thought out to avoid race conditions. An existing subscriber that lost its connection for a short time, for example due to a temporary network issue, will use its offset to restart from the corresponding log position. If that position is no longer in the log (remember, we only keep a short history), the event server will do a cold start, that is, stream the full state. That's why you don't need to keep a log that is longer than the time needed for a cold start.
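
Here is a minimal in-memory sketch of the state-plus-short-log idea and of the resume-or-cold-start decision. The names (`Store`, `Apply`, `CatchUp`) are hypothetical, the data lives in Go maps and slices rather than Redis, and the sketch is single-threaded, so it deliberately sidesteps the race conditions mentioned above.

```go
package main

import (
	"fmt"
	"sort"
)

// Event is a hypothetical change event with a monotonically increasing offset.
type Event struct {
	Offset  int64
	Key     string
	Value   string
	Deleted bool
}

// Store keeps the latest event per live key (state) plus a short log.
type Store struct {
	state  map[string]Event
	log    []Event // most recent events, oldest first
	maxLog int
	last   int64 // offset of the most recent event
}

func NewStore(maxLog int) *Store {
	if maxLog < 1 {
		maxLog = 1
	}
	return &Store{state: make(map[string]Event), maxLog: maxLog}
}

// Apply compacts in real time: it overwrites (or removes) the state entry
// for the key and appends the event to the bounded log.
func (s *Store) Apply(key, value string, deleted bool) Event {
	s.last++
	e := Event{Offset: s.last, Key: key, Value: value, Deleted: deleted}
	if deleted {
		delete(s.state, key)
	} else {
		s.state[key] = e
	}
	s.log = append(s.log, e)
	if len(s.log) > s.maxLog {
		s.log = s.log[len(s.log)-s.maxLog:]
	}
	return e
}

// CatchUp returns the events a subscriber needs before following the live
// stream. offset is the last offset the subscriber has seen, 0 for a fresh
// subscriber. cold reports a cold start: the subscriber should discard its
// local state and rebuild it from the returned events.
func (s *Store) CatchUp(offset int64) (events []Event, cold bool) {
	oldest := s.last + 1
	if len(s.log) > 0 {
		oldest = s.log[0].Offset
	}
	if offset > 0 && offset+1 >= oldest && offset <= s.last {
		// The next event the subscriber needs is still in the log.
		for _, e := range s.log {
			if e.Offset > offset {
				events = append(events, e)
			}
		}
		return events, false
	}
	// Cold start: stream the full state as events, oldest change first.
	for _, e := range s.state {
		events = append(events, e)
	}
	sort.Slice(events, func(i, j int) bool { return events[i].Offset < events[j].Offset })
	return events, true
}

func main() {
	s := NewStore(2)
	s.Apply("user:1", "created", false)
	s.Apply("user:2", "created", false)
	s.Apply("user:1", "disabled", false)
	s.Apply("user:2", "", true)

	fresh, cold := s.CatchUp(0)
	fmt.Println(len(fresh), cold)
	resumed, cold := s.CatchUp(3)
	fmt.Println(len(resumed), cold)
}
```

In a concurrent server, new events can arrive while the state is being streamed, so the cold-start path also has to note the log position at the moment the state read begins and replay from there afterwards.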

Our Adapter

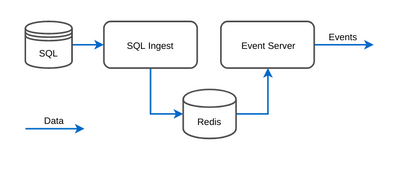

The adapter is made up of 2 microservices: SQL Ingest and the Event Server.

SQL Ingest reads the CT data from SQL Server and builds the two sections in Redis: state and log. It also builds indexes for the filtered versions of these, since we decided we need to support filtered subscriptions. Fortunately, indexes in Redis are very efficient. We also use transaction pipelines in Redis to ensure data consistency.

The second microservice, the Event Server, exposes a streaming gRPC API that allows subscribing to events. The events are reconstructed from Redis and streamed over gRPC. The performance turned out to be pretty good. With client and server both written in Go and using Amazon ElastiCache for Redis, we see rates of over 100k events per second for a single server worker. We actually had to throttle the consumption of events at the subscriber: it was consuming all available CPU, making the microservice unresponsive and prompting Kubernetes to kill it.
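
As an illustration of subscriber-side throttling, here is one simple way to cap the consumption rate in Go. This is an assumption about the general technique, not a description of what our subscriber actually does: `Throttle`, its parameters and the channel standing in for the gRPC stream are all hypothetical.

```go
package main

import (
	"fmt"
	"time"
)

// Throttle reads events from in and passes them to handle, allowing roughly
// perTick events per tick interval. Once the budget for the current interval
// is spent, it blocks until the next tick, which leaves CPU time for the
// rest of the process. tick must be positive.
func Throttle(in <-chan string, perTick int, tick time.Duration, handle func(string)) {
	if perTick < 1 {
		perTick = 1
	}
	ticker := time.NewTicker(tick)
	defer ticker.Stop()

	budget := perTick
	for ev := range in {
		if budget == 0 {
			<-ticker.C // wait for the next interval before continuing
			budget = perTick
		}
		handle(ev)
		budget--
	}
}

func main() {
	// The unbuffered channel stands in for the event stream: when the
	// consumer pauses, the producer is blocked too (backpressure).
	in := make(chan string)
	go func() {
		defer close(in)
		for i := 0; i < 10; i++ {
			in <- fmt.Sprintf("event-%d", i)
		}
	}()

	start := time.Now()
	handled := 0
	Throttle(in, 3, 20*time.Millisecond, func(string) { handled++ })
	fmt.Printf("handled %d events in %v\n", handled, time.Since(start).Round(time.Millisecond))
}
```

Because the consumer simply stops reading while it waits, the slowdown propagates upstream instead of piling events up in memory.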

In the end, it's not so surprising this CDC Event Server performs that well. It's practically a glorified cache, a cache with event streaming semantics.

Slice Controller

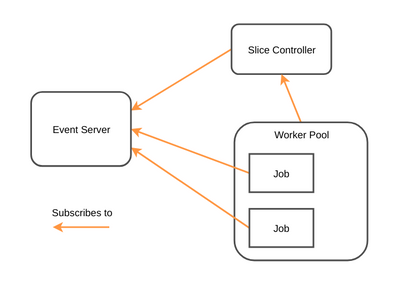

Now that we have the data stream flowing in from the monolith, just like it will be coming in from the future One Flexera components, we can look at what we do with it. The current implementation of the feature we are extracting already has some optimizations. The computation is done in memory, as a queue of batch jobs, one job per tenant. But these jobs are slow and resource hungry.

In order to optimize this processing, we looked at how connected the data is: is there a way to split it into independent islands that could be computed in parallel and potentially more efficiently? It turns out there is, and so this function became a microservice. We call the islands Slices, hence the service is the Slice Controller. The Slice Controller only subscribes to the tables that are needed to identify slices. It then exposes the slice list for each tenant as a streaming gRPC API, to be consumed by the Worker Pool. The Worker Pool creates a job for each Slice, and it's these jobs that do the actual heavy lifting.
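
One natural way to find independent islands is to treat records as nodes, relationships as links, and compute connected components. The sketch below does that with a union-find structure and then fans the resulting slices out to a small worker pool. This is an assumption for illustration only: the article does not describe how slices are actually identified, and `Link`, `Slices` and `RunJobs` are hypothetical names.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// Link says that two records are related and must be computed together.
type Link struct{ A, B string }

// Slices groups nodes into connected components using union-find.
// The result is sorted so that it is deterministic.
func Slices(nodes []string, links []Link) [][]string {
	parent := make(map[string]string)
	find := func(x string) string {
		if _, ok := parent[x]; !ok {
			parent[x] = x
		}
		for parent[x] != x {
			parent[x] = parent[parent[x]] // path halving
			x = parent[x]
		}
		return x
	}
	for _, n := range nodes {
		find(n)
	}
	for _, l := range links {
		if ra, rb := find(l.A), find(l.B); ra != rb {
			parent[ra] = rb
		}
	}
	groups := make(map[string][]string)
	for n := range parent {
		r := find(n)
		groups[r] = append(groups[r], n)
	}
	out := make([][]string, 0, len(groups))
	for _, g := range groups {
		sort.Strings(g)
		out = append(out, g)
	}
	sort.Slice(out, func(i, j int) bool { return out[i][0] < out[j][0] })
	return out
}

// RunJobs runs one job per slice on a fixed number of workers and returns
// the results in slice order.
func RunJobs(slices [][]string, workers int, job func([]string) int) []int {
	if workers < 1 {
		workers = 1
	}
	results := make([]int, len(slices))
	idx := make(chan int)
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := range idx {
				results[i] = job(slices[i]) // each index is written by one worker only
			}
		}()
	}
	for i := range slices {
		idx <- i
	}
	close(idx)
	wg.Wait()
	return results
}

func main() {
	nodes := []string{"a", "b", "c", "d", "e", "f"}
	links := []Link{{"a", "b"}, {"b", "c"}, {"d", "e"}}
	slices := Slices(nodes, links)
	sizes := RunJobs(slices, 2, func(s []string) int { return len(s) })
	fmt.Println(slices, sizes)
}
```

Since slices share no data, the jobs need no coordination with each other, and the pool size becomes the main knob for scaling.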

The architecture that we ended up with for this component is now deployed in a staging environment, working with real-world data. There's still work to do with scaling up, reducing redundant event subscriptions, optimizing the worker pool and optimizing the algorithm of the jobs themselves. But the new component is not only performing much better, it is also able to compute a more accurate result using advanced algorithms that were simply too heavy for the old system.

To end, probably stating the obvious: going microservices comes with deep paradigm shifts, from eventual consistency to owning the full CI/CD. It might feel intimidating at times, but the excitement of working with cutting-edge tech makes up for it.

Victor Dramba, Team Allium, Program Architecture Owner.